Updates

May 2022 – Sufficiently powerful quantum computers are expected to break today’s public-key cryptography, such as RSA and elliptic-curve schemes, by running Shor’s algorithm. As a result, these cryptosystems need to be replaced by quantum-resistant algorithms, also known as post-quantum cryptography (PQC) algorithms.

April 2022 – Digital currency – the ultimate invasion. It is only a matter of time. And while we wait, the FBI conducts warrantless searches of millions.

June 2020 – Because of the racial bias in facial recognition algorithms, the major players are pulling back from selling their technology to police, a short-lived victory no doubt. Amazon, for example, committed only to a one-year hiatus.

Technological advances will make this page obsolete each time I update it.

ACLU sues Clearview AI – I applaud the action, but disagree with the claim of “vulnerable communities uniquely harmed.” Clearview threatens everyone’s privacy and impinges upon everyone’s liberty. That should be the basis of the complaint.

Stealthwear to obscure your heat signature

Makeup and fashion to deceive facial recognition?

____________________________

Protective Arts: I always intended the name to encompass more than physical skills. Personal protection is a means to enhance your personal liberty. And at its American core, liberty means this: you have the right to be left alone.[1]

Big data is a great convenience and the greatest threat to our liberty.

Sci-fi explored these challenges decades ago. There are numerous examples to cite, but I gravitate to the precognition problem in The Minority Report and the danger of prescience explored in the Dune saga.

In the theatrical version of The Minority Report (2002), there is a frightening scene where Tom Cruise is running from the PreCrime police, only to have retinal scanners and big-data marketing pitch goods to him by name. The impossibility of anonymity. We are pursued by marketers pitching to our preferences. Extrapolate from this and predictive behavioral analysis is not a far stretch, which leads to the conclusion that precognition is possible. Philip K. Dick saw this danger in 1956, in a story where the state trains and controls people with precognitive abilities and uses them as a tool of control and coercion, for our own protection.[2]

In Dune, it is prescience that traps humanity, and the God Emperor spends millennia breeding the antidote to prevent humanity from ever being subject to predetermination: a genetic assurance of free will.

Do not be quick to dismiss the hyperbole of science fiction:[3] big data is already being used to influence human choice, behavior, and beliefs. Sufficient data and refined algorithms could indeed predict future actions. Precognition and predetermination.

These authors share a premise: essential to human freedom are the right of self-determination and the thornier challenge of individual anonymity. Yet we now happily trade our data for “free” services, convenience, and (increasingly) our “protection.” And we do so in order to blather about mundane food choices and other inane nothings to anyone and everyone on social media.

And as our preferences, purchases, and comings and goings are tracked, collected, and analyzed, we vaingloriously provide the means to better control us. For the poli-sci crowd, consider Wright’s (2018) argument in Foreign Affairs that AI will reshape the world order (copied below).[4]

But the trade-offs are all too easy. Despite my protests, there is an Alexa in my living room. My family willingly let in a spy device so we could easily order toilet paper and play music. I gave up and capitulated to the convenience. Indeed, it is The Age of Surveillance Capitalism!

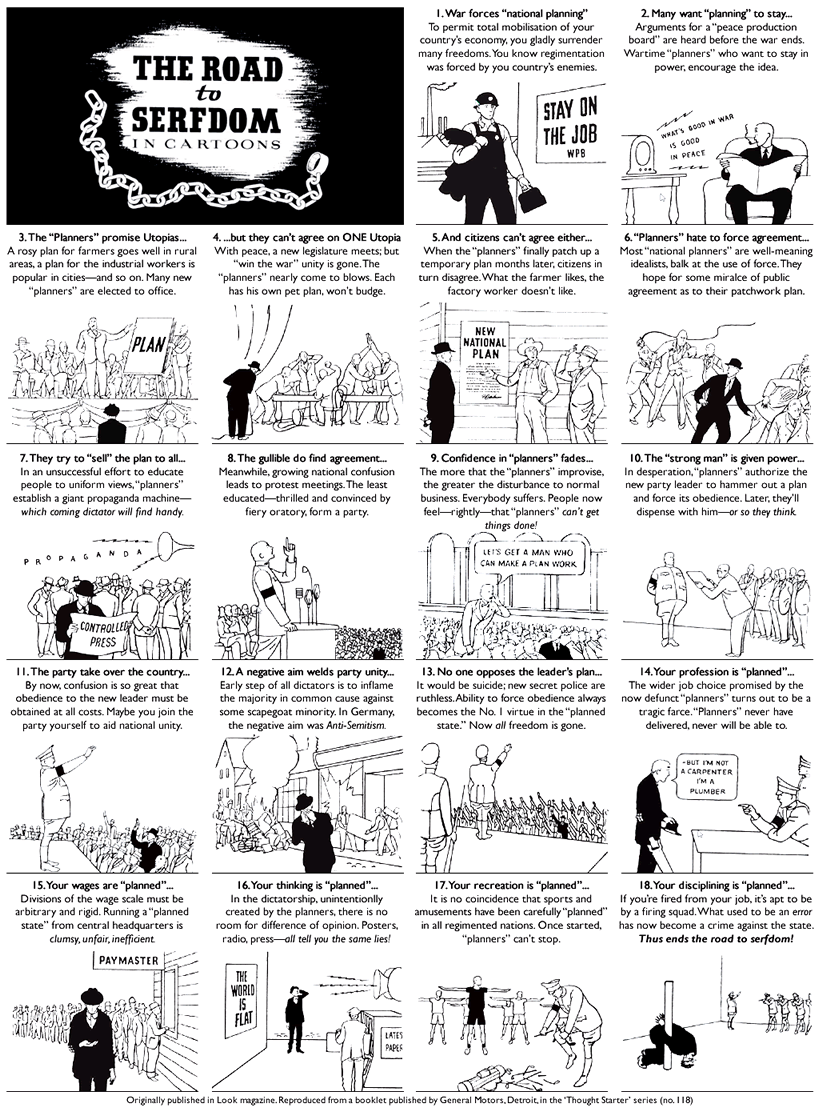

This is a more insidious form of control than we faced in the past. Authoritarian regimes may dupe their citizens for a time, but they are too obvious a form of coercion. People ultimately resist. Orwell and Hayek remain instructive.[5] Now the means of control is truly benign: we do in fact get free stuff and greater convenience, all merely by clicking “I accept” on every privacy notice. What choice did we have?

Precisely. What choice do we have?

I am entirely culpable. I participate. Shit, I am posting this to be data-mined. But the first step in solving a problem is knowing that it exists. We all have a problem. Frankly, I am not sure there is a viable solution.

Some suggestions. First, be aware. Privacy matters. This should be part of your general situational awareness: maintain condition Yellow. Be skeptical.

Erase your digital tracks when you leave your job.

Invest in privacy eyewear. But how will you board airplanes?

Use cash (but read >this< for the contrarian view).

Support the Ludwig von Mises Institute.

And yet, does it matter, given that private surveillance is for sale?

And do you really think Hoover is alone in this abuse of power?

And now we are paying to give our genetic data to the FBI?

And a more naked approach – we will buy homes pre-wired to gather data about us.

While I would love to achieve total off-grid, Jack Reacher drifter invisibility, it is simply unrealistic for most of us. But at the very least, pay attention. Technology is never neutral. It will always be used by people, for their purposes and their benefit. Their benefit, not yours. Make sure you know to what end. Make it part of your protective arts.

_________________

[1] American liberty: a brilliant if sardonic interpretation is >here<: The Gambler (2014) and Fuck You Money. The Europeans, with the Right to be Forgotten, are legislatively better at this than we Americans.

The Federation of Free States is an interesting thought experiment, and a good blog to peruse in general.

[2] The NYPD is cutting edge. And what governments have done for your protection is amazingly invasive. The Patriot Act, anyone? Or, for our British cousins, the amusingly named Regulation of Investigatory Powers Act (RIP, 2000).

And facial recognition – DMV records contain photos of the vast majority of a state’s residents, most of whom have never been charged with a crime. Unreasonable search? It will be interesting to watch how case law develops.

Not a list you want your city to make: The World’s Most Surveilled Cities

[3] V for Vendetta is now almost a period piece. Alan Moore started the graphic novel in 1982, in part because of Margaret Thatcher’s Conservative government, which he assumed would lose power in 1983. Ha! The demos of sheep prevailed, and The Iron Lady went on to become the longest-serving British prime minister of the 20th century. Moore’s influences were the predictable dystopian authors, Orwell most strongly. 1984’s omnipresent Big Brother and the mass surveillance in V are the same means of invading privacy to give the state command and control. The film adaptations pay direct homage by casting John Hurt (R.I.P.) as Adam Sutler in V for Vendetta (2005) and as Winston Smith in 1984 (1984). At least over his career he progressed from the oppressed to the oppressor.

Whenever Orwell’s 1984 (1949) is mentioned, Huxley’s Brave New World (1932) should spring to mind. If you need inspiration to re-read them both, >here< is a brilliant debate on which dystopia we are living in. The very fact that the debate is relevant, and leaves one wondering which book best describes our current experience, is sickening.

_________________

Does anyone remember Sen. Russ Feingold, the ONLY senator to vote against the Patriot Act? His speech is worth a read. The House was far better, voting 357 to 66, with Ron Paul one of only three Republicans against. And it was renewed in 2019, and amended in 2020. Sure, government is there for You!

_________________

[4] How Artificial Intelligence Will Reshape the Global Order:

The Coming Competition Between Digital Authoritarianism and Liberal Democracy

By Nicholas Wright, July 10, 2018

The debate over the effects of artificial intelligence has been dominated by two themes. One is the fear of a singularity, an event in which an AI exceeds human intelligence and escapes human control, with possibly disastrous consequences. The other is the worry that a new industrial revolution will allow machines to disrupt and replace humans in every—or almost every—area of society, from transport to the military to healthcare.

There is also a third way in which AI promises to reshape the world. By allowing governments to monitor, understand, and control their citizens far more closely than ever before, AI will offer authoritarian countries a plausible alternative to liberal democracy, the first since the end of the Cold War. That will spark renewed international competition between social systems.

For decades, most political theorists have believed that liberal democracy offers the only path to sustained economic success. Either governments could repress their people and remain poor or liberate them and reap the economic benefits. Some repressive countries managed to grow their economies for a time, but in the long run authoritarianism always meant stagnation. AI promises to upend that dichotomy. It offers a plausible way for big, economically advanced countries to make their citizens rich while maintaining control over them.

Some countries are already moving in this direction. China has begun to construct a digital authoritarian state by using surveillance and machine learning tools to control restive populations, and by creating what it calls a “social credit system.” Several like-minded countries have begun to buy or emulate Chinese systems. Just as competition between liberal democratic, fascist, and communist social systems defined much of the twentieth century, so the struggle between liberal democracy and digital authoritarianism is set to define the twenty-first.

DIGITAL AUTHORITARIANISM

New technologies will enable high levels of social control at a reasonable cost. Governments will be able [to] selectively censor topics and behaviors to allow information for economically productive activities to flow freely, while curbing political discussions that might damage the regime. China’s so-called Great Firewall provides an early demonstration of this kind of selective censorship.

As well as retroactively censoring speech, AI and big data will allow predictive control of potential dissenters. This will resemble Amazon or Google’s consumer targeting but will be much more effective, as authoritarian governments will be able to draw on data in ways that are not allowed in liberal democracies. Amazon and Google have access only to data from some accounts and devices; an AI designed for social control will draw data from the multiplicity of devices someone interacts with during their daily life. And even more important, authoritarian regimes will have no compunction about combining such data with information from tax returns, medical records, criminal records, sexual-health clinics, bank statements, genetic screenings, physical information (such as location, biometrics, and CCTV monitoring using facial recognition software), and information gleaned from family and friends. AI is as good as the data it has access to. Unfortunately, the quantity and quality of data available to governments on every citizen will prove excellent for training AI systems.

Even the mere existence of this kind of predictive control will help authoritarians. Self-censorship was perhaps the East German Stasi’s most important disciplinary mechanism. AI will make the tactic dramatically more effective. People will know that the omnipresent monitoring of their physical and digital activities will be used to predict undesired behavior, even actions they are merely contemplating. From a technical perspective, such predictions are no different from using AI health-care systems to predict diseases in seemingly healthy people before their symptoms show.

In order to prevent the system from making negative predictions, many people will begin to mimic the behaviors of a “responsible” member of society. These may be as subtle as how long one’s eyes look at different elements on a phone screen. This will improve social control not only by forcing people to act in certain ways, but also by changing the way they think. A central finding in the cognitive science of influence is that making people perform behaviors can change their attitudes and lead to self-reinforcing habits. Making people expound a position makes them more likely to support it, a technique used by the Chinese on U.S. prisoners of war during the Korean War. Salespeople know that getting a potential customer to perform small behaviors can change attitudes to later, bigger requests. More than 60 years of laboratory and fieldwork have shown humans’ remarkable capacity to rationalize their behaviors.

As well as more effective control, AI also promises better central economic planning. As Jack Ma, the founder of the Chinese tech titan Alibaba, argues, with enough information, central authorities can direct the economy by planning and predicting market forces. Rather than slow, inflexible, one-size-fits-all plans, AI promises rapid and detailed responses to customers’ needs.

There’s no guarantee that this kind of digital authoritarianism will work in the long run, but it may not need to, as long as it is a plausible model for which some countries can aim. That will be enough to spark a new ideological competition. If governments start to see digital authoritarianism as a viable alternative to liberal democracy, they will feel no pressure to liberalize. Even if the model fails in the end, attempts to implement it could last for a long time. Communist and fascist models collapsed only after thorough attempts to implement them failed in the real world.

CREATING AND EXPORTING AN ALL-SEEING STATE

No matter how useful a system of social control might prove to a regime, building one would not be easy. Big IT projects are notoriously hard to pull off. They require high levels of coordination, generous funding, and plenty of expertise. For a sense of whether such a system is feasible, it’s worth looking to China, the most important non-Western country that might build one.

China has proved that it can deliver huge, society-spanning IT projects, such as the Great Firewall. It also has the funding to build major new systems. Last year, the country’s internal security budget was at least $196 billion, a 12 percent increase from 2016. Much of the jump was probably driven by the need for new big data platforms. China also has expertise in AI. Chinese companies are global leaders in AI research and Chinese software engineers often beat their American counterparts in international competitions. Finally, technologies, such as smartphones, that are already widespread can form the backbone of a personal monitoring system. Smartphone ownership rates in China are nearing those in the West and in some areas, such as mobile payments, China is the world leader.

China is already building the core components of a digital authoritarian system. The Great Firewall is sophisticated and well established, and it has tightened over the past year. Freedom House, a think tank, rates China the world’s worst abuser of Internet freedom. China is implementing extensive surveillance in the physical world, as well. In 2014, it announced a social credit scheme, which will compute an integrated grade that reflects the quality of every citizen’s conduct, as understood by the government. The development of China’s surveillance state has gone furthest in Xinjiang Province, where it is being used to monitor and control the Muslim Uighur population. Those whom the system deems unsafe are shut out of everyday life; many are even sent to reeducation centers. If Beijing wants, it could roll out the system nationwide.

To be sure, ability is not the same as intention. But China seems to be moving toward authoritarianism and away from any suggestion of liberalization. The government clearly believes that AI and big data will do much to enable this new direction. China’s 2017 AI Development Plan describes how the ability to predict and “grasp group cognition” means “AI brings new opportunities for social construction.”

Digital authoritarianism is not confined to China. Beijing is exporting its model. The Great Firewall approach to the Internet has spread to Thailand and Vietnam. According to news reports, Chinese experts have provided support for government censors in Sri Lanka and supplied surveillance or censorship equipment to Ethiopia, Iran, Russia, Zambia, and Zimbabwe. Earlier this year, the Chinese AI firm Yitu sold “wearable cameras with artificial intelligence-powered facial-recognition technology” to Malaysian law enforcement.

More broadly, China and Russia have pushed back against the U.S. conception of a free, borderless, and global Internet. China uses its diplomatic and market power to influence global technical standards and normalize the idea that domestic governments should control the Internet in ways that sharply limit individual freedom. After reportedly heated competition for influence over a new forum that will set international standards for AI, the United States secured the secretariat, which helps guide the group’s decisions, while Beijing hosted its first meeting, this April, and Wael Diab, a senior director at Huawei, secured the chairmanship of the committee. To the governments that employ them, these measures may seem defensive—necessary to ensure domestic control—but other governments may perceive them as tantamount to attacks on their way of life.

THE DEMOCRATIC RESPONSE

The rise of an authoritarian technological model of governance could, perhaps counterintuitively, rejuvenate liberal democracies. How liberal democracies respond to AI’s challenges and opportunities depends partly on how they deal with them internally and partly on how they deal with the authoritarian alternative externally. In both cases, grounds exist for guarded optimism.

Internally, although established democracies will need to make concerted efforts to manage the rise of new technologies, the challenges aren’t obviously greater than those democracies have overcome before. One big reason for optimism is path dependence. Countries with strong traditions of individual liberty will likely go in one direction with new technology; those without them will likely go another. Strong forces within U.S. society have long pushed back against domestic government mass surveillance programs, albeit with variable success. In the early years of this century, for example, the Defense Advanced Research Projects Agency began to construct “Total Information Awareness” domestic surveillance systems to bring together medical, financial, physical and other data. Opposition from media and civil liberties groups led Congress to defund the program, although it left some workarounds hidden from the public at the time. Most citizens in liberal democracies acknowledge the need for espionage abroad and domestic counterterrorism surveillance, but powerful checks and balances constrain the state’s security apparatus. Those checks and balances are under attack today and need fortification, but this will be more a repeat of past efforts than a fundamentally new challenge.

In the West, governments are not the only ones to pose a threat to individual freedoms. Oligopolistic technology companies are concentrating power by gobbling up competitors and lobbying governments to enact favorable regulations. Yet societies have overcome this challenge before, after past technological revolutions. Think of U.S. President Theodore Roosevelt’s trust-busting, AT&T’s breakup in the 1980s, and the limits that regulators put on Microsoft during the Internet’s rise in the 1990s.

Digital giants are also hurting media diversity and support for public interest content as well as creating a Wild West in political advertising. But previously radical new technologies, such as radio and television, posed similar problems and societies rose to the challenge. In the end, regulation will likely catch up with the new definitions of “media” and “publisher” created by the Internet. Facebook Chief Executive Mark Zuckerberg resisted labeling political advertising in the same way as is required on television, until political pressure forced his hand last year.

Liberal democracies are unlikely to be won over to digital authoritarianism. Recent polling suggests that a declining proportion in Western societies view democracy as “essential,” but this is a long way from a genuine weakening of Western democracy.

The external challenge of a new authoritarian competitor may perhaps strengthen liberal democracies. The human tendency to frame competition in us versus them terms may lead Western countries to define their attitudes to censorship and surveillance at least partly in opposition to the new competition. Most people find the nitty-gritty of data policy boring and pay little attention to the risks of surveillance. But when these issues underpin a dystopian regime in the real world they will prove neither boring nor abstract. Governments and technology firms in liberal democracies will have to explain how they are different.

LESSONS FOR THE WEST

The West can do very little to change the trajectory of a country as capable and confident as China. Digital authoritarian states will likely be around for a while. To compete with them, liberal democracies will need clear strategies. First, governments and societies should rigorously limit domestic surveillance and manipulation. Technology giants should be broken up and regulated. Governments need to ensure a diverse, healthy media environment, for instance by ensuring that overmighty gatekeepers such as Facebook do not reduce media plurality; funding public service broadcasting; and updating the regulations covering political advertising to fit the online world. They should enact laws preventing technology firms from exploiting other sources of personal data, such as medical records, on their customers and should radically curtail data collection from across the multiplicity of platforms with which people come into contact. Even governments should be banned from using such data except in a few circumstances, such as counterterrorism operations.

Second, Western countries should work to influence how states that are neither solidly democratic nor solidly authoritarian implement AI and big data systems. They should provide aid to develop states’ physical and regulatory infrastructure and use the access provided by that aid to prevent governments from using joined-up data. They should promote international norms that respect individual privacy as well as state sovereignty. And they should demarcate the use of AI and metadata for legitimate national security purposes from its use in suppressing human rights.

Finally, Western countries must prepare to push back against the digital authoritarian heartland. Vast AI systems will prove vulnerable to disruption, although as regimes come to rely ever more on them for security, governments will have to take care that tit-for-tat cycles of retribution don’t spiral out of control. Systems that selectively censor communications will enable economic creativity but will also inevitably reveal the outside world. Winning the contest with digital authoritarian governments will not be impossible—as long as liberal democracies can summon the necessary political will to join the struggle.

______________________

[5] Hayek: the abridged graphic-novel version. His arguments are more subtle, so read the original works:

Self-government requires self-discipline. The current political vogue is to demand more of government for the betterment of all. At our peril do we forget the lessons of history: regulation to regimentation to tyranny.

And this 1994 prediction by Edward Luttwak.

And if you think it cannot happen here – pay attention and remain vigilant!
